OpenXR Hand Tracking

In OpenXR, hand features fall into two categories: hand tracking, and hand gesture (action) recognition.

The hand-related extensions are covered one by one below.

Let's first break down hand tracking in OpenXR, starting from its definitions in the spec.

Spec definitions

XR_EXT_hand_tracking

This extension enables applications to locate the individual joints of hand tracking inputs. It enables applications to render hands in XR experiences and interact with virtual objects using hand joints.

- locate the individual joints: get the position of each hand joint.

- render hands in XR experiences and interact with virtual objects using hand joints: at the application level, the user's hand can be drawn, and the fingers can be used to interact with virtual objects.
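As a minimal sketch of the "interact using hand joints" idea, the C code below checks whether the thumb tip and index tip are touching. To compile without `<openxr/openxr.h>`, it uses local stand-in types that mirror XrHandJointLocationEXT (locationFlags, pose, radius) but keep only `pose.position`; the indices follow the XrHandJointEXT order (thumb tip = 5, index tip = 10) and the `0x2` flag mirrors XR_SPACE_LOCATION_POSITION_VALID_BIT. The function name and the gap threshold are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Local stand-ins mirroring the spec layout; real code includes <openxr/openxr.h>. */
typedef struct { float x, y, z; } Vec3f;                     /* ~ XrVector3f */
typedef uint64_t SpaceLocationFlags;                          /* ~ XrSpaceLocationFlags */
#define POSITION_VALID_BIT ((SpaceLocationFlags)0x00000002)   /* ~ XR_SPACE_LOCATION_POSITION_VALID_BIT */

/* ~ XrHandJointLocationEXT, keeping only pose.position from the pose. */
typedef struct {
    SpaceLocationFlags locationFlags;
    Vec3f position;
    float radius;
} HandJoint;

/* Indices follow the XrHandJointEXT order of XR_EXT_hand_tracking. */
enum { JOINT_THUMB_TIP = 5, JOINT_INDEX_TIP = 10, HAND_JOINT_COUNT = 26 };

/* True when both tips have a valid position and are closer than max_gap_m meters. */
bool thumb_index_touching(const HandJoint joints[HAND_JOINT_COUNT], float max_gap_m) {
    assert(joints != NULL && max_gap_m > 0.0f);
    const HandJoint *thumb_tip = &joints[JOINT_THUMB_TIP];
    const HandJoint *index_tip = &joints[JOINT_INDEX_TIP];
    if (!(thumb_tip->locationFlags & POSITION_VALID_BIT) ||
        !(index_tip->locationFlags & POSITION_VALID_BIT))
        return false; /* untracked joint: do not report a touch */
    float dx = thumb_tip->position.x - index_tip->position.x;
    float dy = thumb_tip->position.y - index_tip->position.y;
    float dz = thumb_tip->position.z - index_tip->position.z;
    /* Compare squared distances so no sqrt is needed. */
    return dx * dx + dy * dy + dz * dz < max_gap_m * max_gap_m;
}
```

Checking the valid bit first matters because a runtime may return stale or zeroed positions for joints it cannot currently see.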

XR_EXT_hand_joints_motion_range

This extension augments the XR_EXT_hand_tracking extension to enable applications to request that the XrHandJointLocationsEXT returned by xrLocateHandJointsEXT should return hand joint locations conforming to a range of motion specified by the application.

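- This extension adds the structure XrHandJointsMotionRangeInfoEXT, which the application chains onto the next pointer of XrHandJointsLocateInfoEXT, the input parameter of xrLocateHandJointsEXT; the requested range then applies to the XrHandJointLocationsEXT that the call returns. Its handJointsMotionRange member takes one of two values:

  - XR_HAND_JOINTS_MOTION_RANGE_UNOBSTRUCTED_EXT: the returned joint data is not constrained by any held object (bare-hand data).

  - XR_HAND_JOINTS_MOTION_RANGE_CONFORMING_TO_CONTROLLER_EXT: the returned data conforms to a hand holding a controller.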
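The next-chain mechanism is easiest to see from both sides. The sketch below uses simplified stand-in structs whose numeric type tags are placeholders, not the real XrStructureType values: the application chains a motion-range request onto the locate info, and a runtime-style helper walks the chain by reading the shared type/next header, the same pattern XrBaseInStructure enables. MotionRangeInfo, LocateInfo, find_in_chain and MOTION_RANGE_NOT_REQUESTED are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-ins; the numeric type tags are placeholders, NOT real XrStructureType values. */
typedef enum {
    TYPE_LOCATE_INFO = 1,        /* ~ XR_TYPE_HAND_JOINTS_LOCATE_INFO_EXT */
    TYPE_MOTION_RANGE_INFO = 2   /* ~ XR_TYPE_HAND_JOINTS_MOTION_RANGE_INFO_EXT */
} StructType;

typedef enum {
    MOTION_RANGE_NOT_REQUESTED = 0,            /* local sentinel, not in the spec */
    MOTION_RANGE_UNOBSTRUCTED = 1,             /* ~ XR_HAND_JOINTS_MOTION_RANGE_UNOBSTRUCTED_EXT */
    MOTION_RANGE_CONFORMING_TO_CONTROLLER = 2  /* ~ XR_HAND_JOINTS_MOTION_RANGE_CONFORMING_TO_CONTROLLER_EXT */
} MotionRange;

/* Header shared by every chainable struct, like XrBaseInStructure. */
typedef struct BaseIn { StructType type; const struct BaseIn *next; } BaseIn;

/* ~ XrHandJointsMotionRangeInfoEXT */
typedef struct { StructType type; const void *next; MotionRange handJointsMotionRange; } MotionRangeInfo;
/* ~ XrHandJointsLocateInfoEXT (baseSpace and time omitted) */
typedef struct { StructType type; const void *next; } LocateInfo;

/* Runtime side: walk the next chain and return the first struct of the wanted type. */
static const void *find_in_chain(const void *next, StructType wanted) {
    for (const BaseIn *s = next; s != NULL; s = s->next)
        if (s->type == wanted)
            return s;
    return NULL;
}

/* Reports which motion range the application asked for, if any. */
MotionRange requested_motion_range(const LocateInfo *info) {
    assert(info != NULL);
    const MotionRangeInfo *mr = find_in_chain(info->next, TYPE_MOTION_RANGE_INFO);
    return mr != NULL ? mr->handJointsMotionRange : MOTION_RANGE_NOT_REQUESTED;
}
```

On the application side the only step is setting the locate info's `next` to the motion-range struct before calling xrLocateHandJointsEXT; unknown structs further down the chain are simply skipped by the walk.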

XR_FB_hand_tracking_aim

This extension allows:

- An application to get a set of basic gesture states for the hand when using the XR_EXT_hand_tracking extension.

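This extension returns the results of some basic gesture recognition. Its structure, XrHandTrackingAimStateFB, is also chained onto the next pointer of XrHandJointLocationsEXT, the output parameter of xrLocateHandJointsEXT. Besides the aim pose, it carries a pinch value for each of four fingers in the range 0 to 1.0; a value of 1.0 means that finger is fully pinched against the thumb. Key data structure: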

```c
typedef struct XrHandTrackingAimStateFB {
    XrStructureType             type;
    void*                       next;
    XrHandTrackingAimFlagsFB    status;
    XrPosef                     aimPose;
    float                       pinchStrengthIndex;
    float                       pinchStrengthMiddle;
    float                       pinchStrengthRing;
    float                       pinchStrengthLittle;
} XrHandTrackingAimStateFB;
```

- pinchStrengthIndex is the current pinching strength for the index finger of this hand. Range is 0.0 to 1.0, with 1.0 meaning index and thumb are fully touching.

- pinchStrengthMiddle is the current pinching strength for the middle finger of this hand. Range is 0.0 to 1.0, with 1.0 meaning middle and thumb are fully touching.

- pinchStrengthRing is the current pinching strength for the ring finger of this hand. Range is 0.0 to 1.0, with 1.0 meaning ring and thumb are fully touching.

- pinchStrengthLittle is the current pinching strength for the little finger of this hand. Range is 0.0 to 1.0, with 1.0 meaning little and thumb are fully touching.
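The pinchStrength values are continuous, so an application usually turns them into discrete pinch events itself. A minimal sketch, assuming hypothetical thresholds of 0.9 to enter and 0.6 to release: the gap between them (hysteresis) keeps a value hovering near a single threshold from toggling the pinch every frame. PinchDetector and pinch_update are illustrative names, not part of the extension.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical thresholds, not taken from the spec. */
#define PINCH_ENTER 0.9f
#define PINCH_EXIT  0.6f

typedef struct { bool pinching; } PinchDetector;

/* Feed one pinchStrength sample (0.0..1.0) per frame.
   Returns true only on the frame a new pinch starts. */
bool pinch_update(PinchDetector *d, float strength) {
    assert(d != NULL && strength >= 0.0f && strength <= 1.0f);
    if (!d->pinching && strength >= PINCH_ENTER) {
        d->pinching = true;
        return true;
    }
    if (d->pinching && strength <= PINCH_EXIT)
        d->pinching = false; /* released; a new pinch needs to cross PINCH_ENTER again */
    return false;
}
```

One detector per finger and hand is enough, e.g. feeding it pinchStrengthIndex each frame.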

XR_FB_hand_tracking_capsules

This extension allows:

- An application to get a list of capsules that represent the volume of the hand when using the XR_EXT_hand_tracking extension.

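- This extension describes the volume around the finger joints. Its structure is XrHandCapsuleFB, where each capsule is defined by two end points and a radius, i.e. a joint's length and thickness (judging from the spec's description, it is meant for collision detection).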
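Since a capsule is a line segment with a radius, a collision test against it reduces to a point-to-segment distance. The sketch below uses a stand-in struct mirroring the points[2] and radius members of XrHandCapsuleFB (its joint member is omitted) and tests whether a point, for example a fingertip of the other hand or a small virtual object, lies inside the capsule. capsule_contains is a hypothetical helper.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3f;  /* ~ XrVector3f */

/* Stand-in mirroring XrHandCapsuleFB: two end points plus a radius. */
typedef struct { Vec3f points[2]; float radius; } HandCapsule;

static float dot3(Vec3f a, Vec3f b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3f sub3(Vec3f a, Vec3f b) { Vec3f r = {a.x - b.x, a.y - b.y, a.z - b.z}; return r; }

/* True if p is within `radius` of the segment points[0]..points[1]. */
bool capsule_contains(const HandCapsule *c, Vec3f p) {
    assert(c != NULL);
    Vec3f ab = sub3(c->points[1], c->points[0]);
    Vec3f ap = sub3(p, c->points[0]);
    float len2 = dot3(ab, ab);
    /* Project p onto the segment and clamp to [0, 1]. */
    float t = len2 > 0.0f ? dot3(ap, ab) / len2 : 0.0f;
    if (t < 0.0f) t = 0.0f;
    if (t > 1.0f) t = 1.0f;
    Vec3f closest = {c->points[0].x + t * ab.x, c->points[0].y + t * ab.y, c->points[0].z + t * ab.z};
    Vec3f d = sub3(p, closest);
    return dot3(d, d) <= c->radius * c->radius;
}
```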

XR_FB_hand_tracking_mesh

This extension allows:

- An application to get a skinned hand mesh and a bind pose skeleton that can be used to render a hand object driven by the joints from the XR_EXT_hand_tracking extension.

- Control the scale of the hand joints returned by XR_EXT_hand_tracking

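- After asking my Unity colleagues and a good deal of study, I finally understood the difference between the mesh and the joints: the mesh is a skinned hand mesh with a bind-pose skeleton, and the joints located every frame drive that mesh. In practice this extension uses two structure types: XR_TYPE_HAND_TRACKING_MESH_FB and XR_TYPE_HAND_TRACKING_SCALE_FB.

  - The former, XR_TYPE_HAND_TRACKING_MESH_FB, corresponds to the structure XrHandTrackingMeshFB. It is returned by calling xrGetHandMeshFB (a function that must also be implemented for the XR_FB_hand_tracking_mesh extension).

  - The latter, XR_TYPE_HAND_TRACKING_SCALE_FB, corresponds to the structure XrHandTrackingScaleFB. It is passed through the next chain of XrHandJointLocationsEXT, the output parameter of xrLocateHandJointsEXT.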
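To make the mesh/joint relationship concrete: the mesh from xrGetHandMeshFB is skinned, and each frame the located joints deform it. A minimal linear blend skinning sketch follows, assuming each vertex has up to four joint indices and weights (mirroring vertexBlendIndices and vertexBlendWeights in XrHandTrackingMeshFB) and that the caller has already built one skin matrix per joint as currentJointPose * inverse(jointBindPose). The Affine3x4 type and skin_vertex function are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { float x, y, z; } Vec3f;

/* Row-major 3x4 affine transform; per joint: currentJointPose * inverse(jointBindPose). */
typedef struct { float m[3][4]; } Affine3x4;

static Vec3f apply_affine(const Affine3x4 *a, Vec3f v) {
    Vec3f r;
    r.x = a->m[0][0] * v.x + a->m[0][1] * v.y + a->m[0][2] * v.z + a->m[0][3];
    r.y = a->m[1][0] * v.x + a->m[1][1] * v.y + a->m[1][2] * v.z + a->m[1][3];
    r.z = a->m[2][0] * v.x + a->m[2][1] * v.y + a->m[2][2] * v.z + a->m[2][3];
    return r;
}

/* Linear blend skinning: weighted sum of the bind-pose vertex moved by each influencing joint. */
Vec3f skin_vertex(Vec3f bind_pos, const int16_t idx[4], const float w[4],
                  const Affine3x4 *skin, size_t joint_count) {
    Vec3f out = {0.0f, 0.0f, 0.0f};
    for (int k = 0; k < 4; ++k) {
        if (w[k] == 0.0f)
            continue; /* unused influence slot */
        assert(idx[k] >= 0 && (size_t)idx[k] < joint_count);
        Vec3f p = apply_affine(&skin[idx[k]], bind_pos);
        out.x += w[k] * p.x;
        out.y += w[k] * p.y;
        out.z += w[k] * p.z;
    }
    return out;
}
```

The mesh data only needs to be fetched once; the per-frame work is rebuilding the skin matrices from the located joints and rerunning this loop (usually on the GPU).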

XR_MSFT_hand_tracking_mesh

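This extension is a bit more complex. As the name suggests, it is a Microsoft-provided set of hand-tracking functions and structures, and its sample code differs from the FB one. Most importantly, the mesh in this extension is dynamic.

Since Oculus itself does not support this extension, I have not analyzed it further. A runtime only needs to implement one of the two, FB or MSFT, to provide the hand tracking functionality.

TODO: revisit this extension.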

XR_ULTRALEAP_hand_tracking_forearm

This extension extends the XrHandJointSetEXT enumeration with a new member XR_HAND_JOINT_SET_HAND_WITH_FOREARM_ULTRALEAP. This joint set is the same as the XR_HAND_JOINT_SET_DEFAULT_EXT, plus a joint representing the user’s elbow, XR_HAND_FOREARM_JOINT_ELBOW_ULTRALEAP.

- On top of the original 26 joints, one extra joint for the elbow is added, for 27 in total:

XR_HAND_FOREARM_JOINT_ELBOW_ULTRALEAP
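One practical consequence is the array size: when locating joints with this joint set, the application has to provide room for 27 joint locations instead of 26. The sketch below assumes the elbow is appended at index 26, after the 26 default joints (mirroring XR_HAND_FOREARM_JOINT_ELBOW_ULTRALEAP; check the header for the exact value), and computes the wrist-minus-elbow forearm vector. The constants and forearm_vector are stand-ins.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3f;

enum {
    DEFAULT_JOINT_COUNT = 26, /* ~ XR_HAND_JOINT_COUNT_EXT */
    FOREARM_JOINT_COUNT = 27, /* assumed: 26 default joints + elbow */
    JOINT_WRIST = 1,          /* ~ XR_HAND_JOINT_WRIST_EXT */
    JOINT_ELBOW = 26          /* assumed index of XR_HAND_FOREARM_JOINT_ELBOW_ULTRALEAP */
};

/* Returns the forearm direction (wrist minus elbow). Fails the assert if the caller
   only allocated the default 26-joint array. */
Vec3f forearm_vector(const Vec3f *positions, size_t count) {
    assert(positions != NULL && count >= FOREARM_JOINT_COUNT);
    Vec3f w = positions[JOINT_WRIST];
    Vec3f e = positions[JOINT_ELBOW];
    Vec3f r = {w.x - e.x, w.y - e.y, w.z - e.z};
    return r;
}
```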

XR_MSFT_hand_interaction

This extension defines a new interaction profile for near interactions and far interactions driven by directly-tracked hands.

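- This extension is exactly the near-field and far-field bare-hand interaction we keep talking about.

In the spec, a bare-hand gesture is treated as a special, button-like input, so it is also bound through input paths: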

Supported component paths:

- …/input/select/value

- …/input/squeeze/value

- …/input/aim/pose

- …/input/grip/pose
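Binding these components works the same way as for a controller: each full path is /user/hand/left or /user/hand/right plus the component, suggested under the interaction profile /interaction_profiles/microsoft/hand_interaction. In real code the strings go to xrStringToPath and then into XrActionSuggestedBinding entries passed to xrSuggestInteractionProfileBindings; the sketch below only builds the path strings, and binding_path is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Interaction profile path defined by XR_MSFT_hand_interaction. */
#define HAND_INTERACTION_PROFILE "/interaction_profiles/microsoft/hand_interaction"

/* Builds e.g. "/user/hand/left/input/select/value" into buf.
   Returns 0 on success, -1 if the result would not fit. */
int binding_path(char *buf, size_t len, const char *hand, const char *component) {
    assert(buf != NULL && hand != NULL && component != NULL);
    int n = snprintf(buf, len, "/user/hand/%s/input/%s", hand, component);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

Calling it for both hands and the four components (select/value, squeeze/value, aim/pose, grip/pose) yields the eight bindings this profile supports.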

Moreover, the spec strictly defines far-field and near-field gestures:

- Far field

The application should use the …/select/value and …/aim/pose paths for far hand interactions, such as using a virtual laser pointer to target and click a button on the wall. Here, …/select/value can be used as either a boolean or float action type, where the value XR_TRUE or 1.0f represents a closed hand shape.

- Near field

The application should use the …/squeeze/value and …/grip/pose for near hand interactions, such as picking up a virtual object within the user’s reach from a table. Here, …/squeeze/value can be used as either a boolean or float action type, where the value XR_TRUE or 1.0f represents a closed hand shape.

- Near and far field are not mutually exclusive: both must be recognized and responded to at the same time.

The runtime may trigger both “select” and “squeeze” actions for the same hand gesture if the user’s hand gesture is able to trigger both near and far interactions. The application should not assume they are as independent as two buttons on a controller.

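Given this spec, both how a gesture is triggered and how the application should respond are spelled out: the gesture is exposed as an equivalent action (a button), and the application watches whether that action fires and then responds accordingly.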
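Because one closed-hand gesture may raise both select and squeeze, the application has to decide what to do when both fire together. A minimal sketch, assuming the float values were already read each frame (for example with xrGetActionStateFloat) and using a hypothetical 0.8 press threshold: it detects a rising edge per action, then applies one illustrative policy that prefers a near grab when an object is within reach. The policy, names, and threshold are assumptions, not something the spec prescribes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PRESS_THRESHOLD 0.8f /* hypothetical */

typedef struct { bool select_down, squeeze_down; } HandButtons;
typedef enum { ACTION_NONE, ACTION_FAR_SELECT, ACTION_NEAR_GRAB } HandAction;

/* Called once per frame per hand with the .../select/value and .../squeeze/value floats. */
HandAction update_hand(HandButtons *s, float select_value, float squeeze_value,
                       bool object_in_reach) {
    assert(s != NULL);
    bool sel = select_value >= PRESS_THRESHOLD;
    bool sqz = squeeze_value >= PRESS_THRESHOLD;
    bool sel_edge = sel && !s->select_down;  /* rising edge only */
    bool sqz_edge = sqz && !s->squeeze_down;
    s->select_down = sel;
    s->squeeze_down = sqz;
    /* The same gesture can raise both edges, so pick one response. */
    if (sqz_edge && object_in_reach)
        return ACTION_NEAR_GRAB;
    if (sel_edge)
        return ACTION_FAR_SELECT;
    return ACTION_NONE;
}
```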

XR_EXT_palm_pose

This extension defines a new “standard pose identifier” for interaction profiles, named “palm_ext”. The new identifier is a pose that can be used to place application-specific visual content such as avatar visuals that may or may not match human hands. This extension also adds a new input component path using this “palm_ext” pose identifier to existing interaction profiles when active.

The application can use the …/input/palm_ext/pose component path to place visual content representing the user’s physical hand location. Application visuals may depict, for example, realistic human hands that are very simply animated or creative depictions such as an animal, an alien, or robot limb extremity.

- This passage pins down what the extension really is:

representing the user's physical hand location, i.e. where the user's hand is in the physical world.
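Placing content at …/input/palm_ext/pose means transforming a palm-local offset by the located pose. The sketch below does that with stand-in types mirroring XrPosef (an orientation quaternion x, y, z, w plus a position): it rotates the point with the identity v' = v + w*t + u × t, where u is the quaternion's vector part and t = 2(u × v), then adds the position. pose_transform is a hypothetical name, and the quaternion is assumed to be unit length, as OpenXR poses are.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3f;                      /* ~ XrVector3f */
typedef struct { float x, y, z, w; } Quatf;                   /* ~ XrQuaternionf */
typedef struct { Quatf orientation; Vec3f position; } Posef;  /* ~ XrPosef */

static Vec3f cross3(Vec3f a, Vec3f b) {
    Vec3f r = {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
    return r;
}

/* Maps a palm-local point into the pose's base space: rotate, then translate. */
Vec3f pose_transform(const Posef *pose, Vec3f v) {
    assert(pose != NULL);
    Vec3f u = {pose->orientation.x, pose->orientation.y, pose->orientation.z};
    float w = pose->orientation.w;
    Vec3f t = cross3(u, v);
    t.x *= 2.0f; t.y *= 2.0f; t.z *= 2.0f;
    Vec3f c = cross3(u, t);
    Vec3f r = {
        v.x + w * t.x + c.x + pose->position.x,
        v.y + w * t.y + c.y + pose->position.y,
        v.z + w * t.z + c.z + pose->position.z,
    };
    return r;
}
```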

Hand gesture definitions

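From the spec walkthrough, we can see that the hand extensions split into two parts: rendering and interaction. Rendering corresponds to tracking, and interaction corresponds to recognition (XR_FB_hand_tracking_aim and XR_MSFT_hand_interaction). On Oculus, hand gestures also have a separate SDK, the Interaction SDK, which I still need to study together with Unity.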

Gesture recognition based on Euclidean distance

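I first came across a re-upload on Bilibili of Hand Tracking Gesture Detection - Unity Oculus Quest Tutorial. The original is by Valem on YouTube, published in March 2020, before Oculus had released the Interaction SDK (introduced in v38). So in this video Valem uses a fairly simple algorithm to decide whether the current hand matches a recorded "sample gesture".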

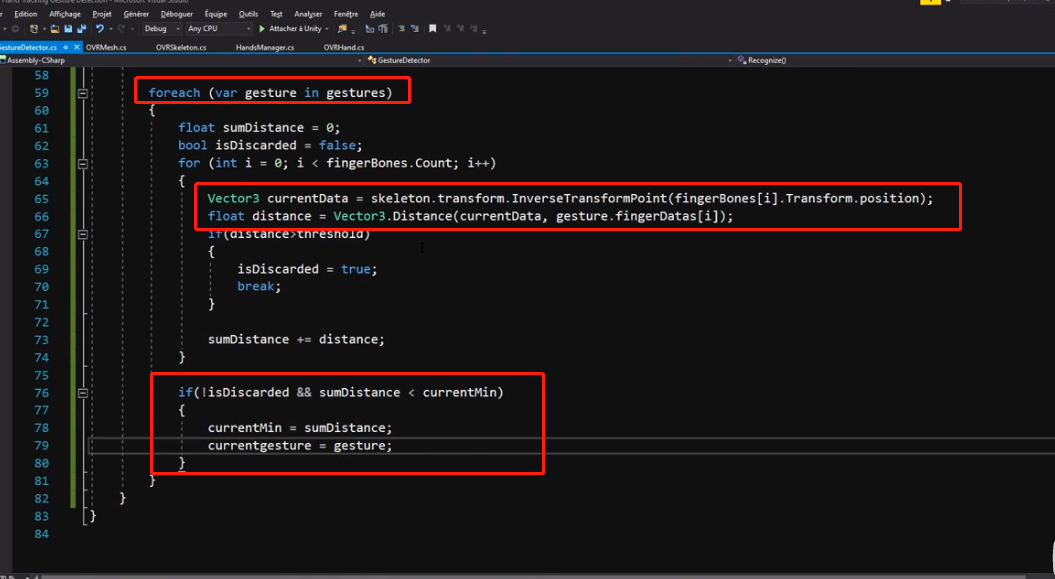

The core algorithm appears at around 11:40 in the video:

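- Iterate over all saved gestures.

- For each saved gesture, compute the distance between each of its finger joints and the corresponding joint of the current hand.

- If any distance exceeds a threshold, the current hand is not that gesture.

- Among the gestures that remain, the one with the smallest distance is the match.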

The whole application is built on the joint points from OpenXR, so gesture recognition here is just application-level logic.
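The steps above can be sketched in C as nearest-template matching. Two deliberate choices differ from the description: per-joint distances are compared as squared values (equivalent for the threshold test), and templates are ranked by the sum of squared distances rather than the sum of distances, which avoids a sqrt. Joint positions are assumed to be in hand-local space, so the hand's world position does not affect the match. GestureTemplate, recognize and GESTURE_JOINTS are hypothetical names.

```c
#include <assert.h>
#include <float.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3f;

#define GESTURE_JOINTS 26 /* hypothetical: one sample per XR_EXT_hand_tracking joint */

typedef struct {
    const char *name;
    Vec3f joints[GESTURE_JOINTS]; /* recorded in hand-local space */
} GestureTemplate;

static float dist2(Vec3f a, Vec3f b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

/* Returns the index of the best-matching template, or -1 if none matches.
   A template is discarded as soon as any joint is farther than `threshold`;
   among the survivors, the smallest summed squared distance wins. */
int recognize(const Vec3f current[GESTURE_JOINTS], const GestureTemplate *templates,
              size_t count, float threshold) {
    assert(current != NULL && (templates != NULL || count == 0));
    int best = -1;
    float best_score = FLT_MAX;
    float t2 = threshold * threshold;
    for (size_t g = 0; g < count; ++g) {
        float sum = 0.0f;
        int discarded = 0;
        for (int j = 0; j < GESTURE_JOINTS; ++j) {
            float d2 = dist2(current[j], templates[g].joints[j]);
            if (d2 > t2) { discarded = 1; break; }
            sum += d2;
        }
        if (!discarded && sum < best_score) {
            best_score = sum;
            best = (int)g;
        }
    }
    return best;
}
```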

Gestures in the Oculus Interaction SDK

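At first I downloaded v35 straight from the official site, which was a dead end: no matter what I tried, I could not find the Interaction SDK. After a long struggle I reread the official documentation and learned that the Oculus Interaction SDK was only introduced in v38.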

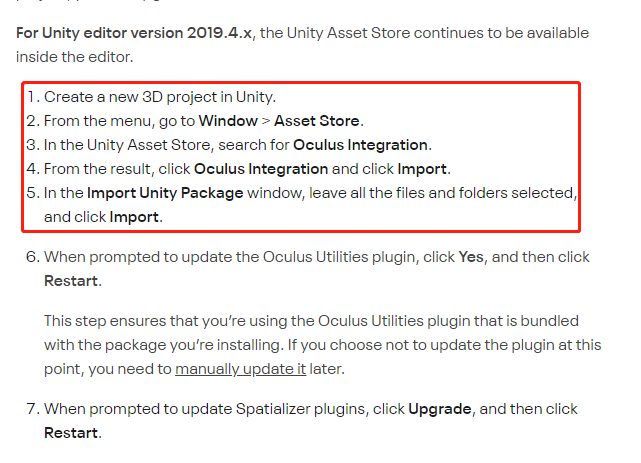

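To spare others the same trap, I strongly recommend following the import steps in the official Oculus Unity documentation: https://developer.oculus.com/documentation/unity/unity-import/. The correct procedure is shown in the screenshots below. Do not download from the Download page on the Oculus website on your own; it hosts older versions.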

Download the latest Oculus Integration from the Unity Asset Store (as of 2022/07/11, the latest version is v41).

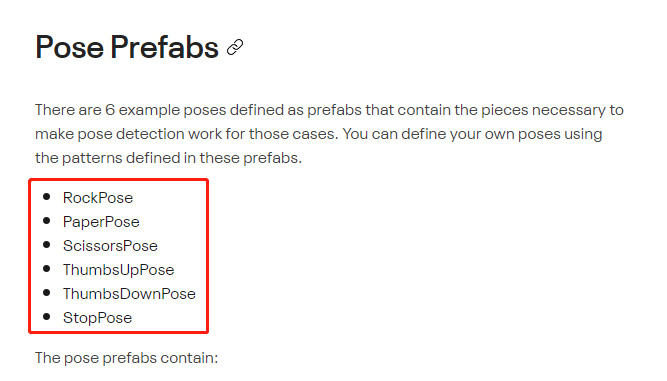

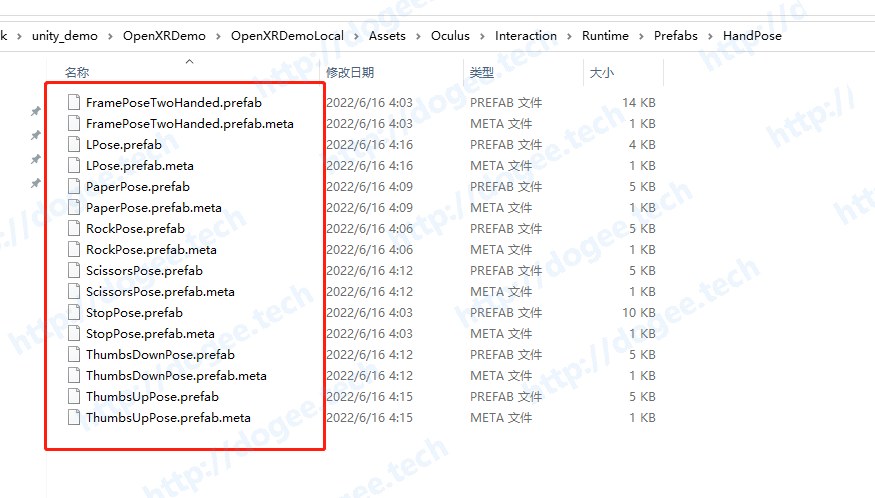

After the download completes, the 6 default hand poses mentioned in the official documentation can be found in the project under Assets\Oculus\Interaction\Runtime\Prefabs\HandPose:

https://developer.oculus.com/documentation/unity/unity-isdk-hand-pose-detection/

- How to use these hand poses to trigger behavior in Unity is covered in a YouTube video, Oculus Interaction SDK Hand Pose Detection With Custom Hand Poses, so I will not go into it here.

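Those are the two concrete approaches to hand gestures in Unity. The first, "Gesture recognition based on Euclidean distance", is simple and easy to follow, because I could see the actual code (.cs files) and how the joint points are used. For the second, I still do not know which API its data comes from; I need to learn more about the Unity side.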

- [ ] How to use .prefab files

- [ ] OpenXR data flow in Unity